LangChain vs AutoGen vs CrewAI: Architectural Patterns for Production Agent Systems

Comprehensive technical comparison of three leading agent frameworks, examining architectural patterns, performance characteristics, and real-world deployment considerations for enterprise AI agent systems.


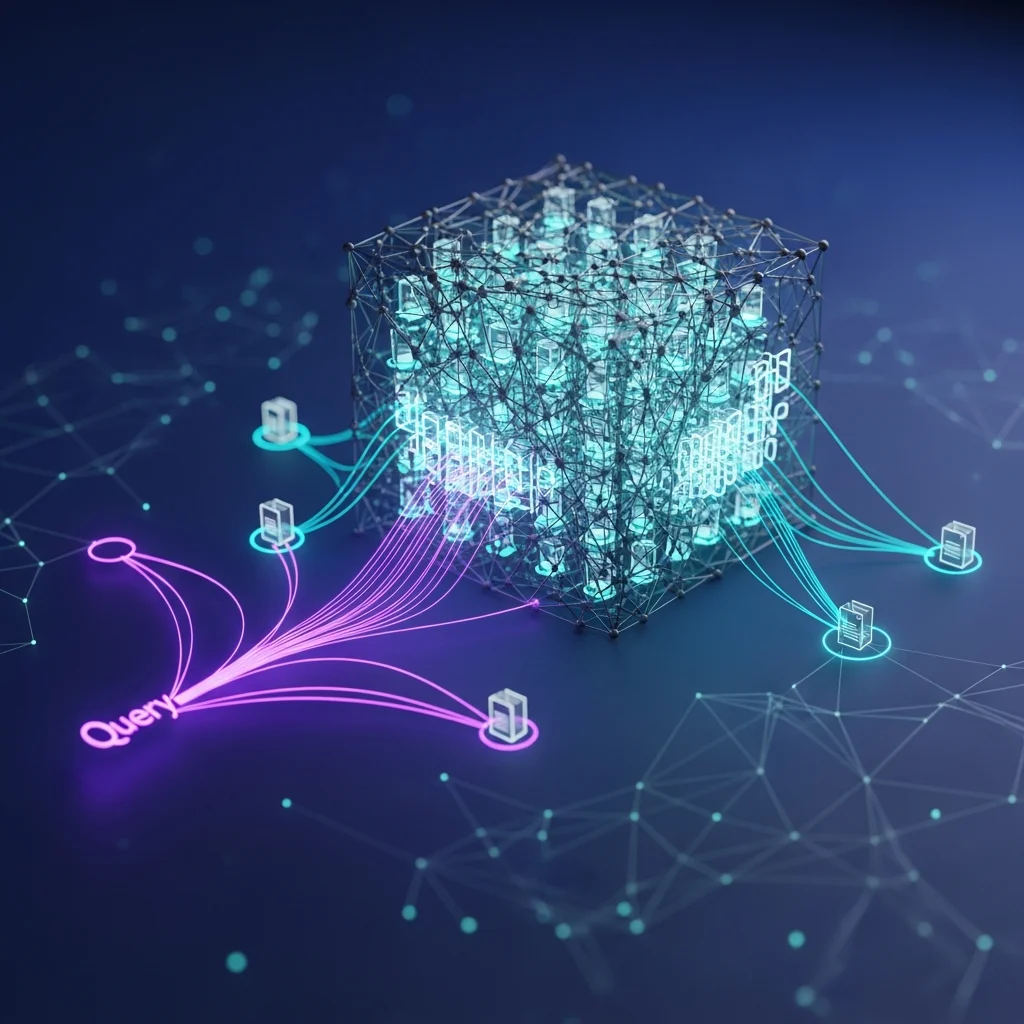

In the rapidly evolving landscape of AI agent frameworks, three major contenders have emerged as production-ready solutions: LangChain, AutoGen, and CrewAI. Each framework embodies distinct architectural philosophies, trade-offs, and deployment patterns that significantly impact their suitability for enterprise applications. This technical deep-dive examines the core architectural patterns, performance characteristics, and real-world implementation considerations for software engineers and architects evaluating these frameworks.

Core Architectural Philosophies

LangChain: The Composable Foundation

LangChain operates on a modular, composable architecture in which agents are built from reusable components. The framework’s design centers on the concept of “chains”: sequences of operations that transform inputs through successive processing steps.

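```python
import ast
import operator

from langchain.agents import AgentType, initialize_agent
from langchain.llms import OpenAI  # newer releases: from langchain_openai import OpenAI
from langchain.tools import Tool

# LangChain's modular approach (legacy initialize_agent API; newer releases
# deprecate it in favor of constructors such as create_react_agent)

# Arithmetic evaluator that never passes model-generated text to eval()
_OPS = {
    ast.Add: operator.add, ast.Sub: operator.sub, ast.Mult: operator.mul,
    ast.Div: operator.truediv, ast.USub: operator.neg,
}


def calculate(expression: str) -> str:
    def _eval(node: ast.AST) -> float:
        if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](_eval(node.left), _eval(node.right))
        if isinstance(node, ast.UnaryOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](_eval(node.operand))
        raise ValueError(f"Unsupported expression: {expression!r}")
    return str(_eval(ast.parse(expression, mode="eval").body))


llm = OpenAI(temperature=0)  # reads OPENAI_API_KEY from the environment

tools = [
    Tool(
        name="Calculator",
        func=calculate,
        description="Useful for mathematical calculations",
    )
]

agent = initialize_agent(
    tools,
    llm,
    agent=AgentType.ZERO_SHOT_REACT_DESCRIPTION,
    verbose=True,
)
```

Key Architectural Characteristics: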

- Composition over Inheritance: Components are designed to be mixed and matched

- Pluggable Backends: Support for multiple LLM providers and vector stores

- Memory Management: Pluggable memory classes (for example, windowed conversation buffers) for conversation context

- Tool Abstraction: Unified interface for external integrations
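To make the chain idea concrete independently of any LangChain version, here is a minimal plain-Python sketch of composition: each step wraps a function, and the | operator pipes one step's output into the next. The Step class and the three example steps are hypothetical; the pipe syntax loosely mirrors the LangChain Expression Language (LCEL), but this is not LangChain's API, and fake_model simply upper-cases text in place of a real model call.

```python
from typing import Any, Callable


class Step:
    """A single processing step that can be piped into another with `|`."""

    def __init__(self, fn: Callable[[Any], Any], name: str = "step") -> None:
        self.fn = fn
        self.name = name

    def invoke(self, value: Any) -> Any:
        return self.fn(value)

    def __or__(self, other: "Step") -> "Step":
        # Composition: run self first, then feed its output into other.
        return Step(lambda value: other.invoke(self.invoke(value)),
                    name=f"{self.name} | {other.name}")


# Hypothetical steps standing in for a prompt template, a model call, and a parser.
build_prompt = Step(lambda q: f"Question: {q.strip()}", name="prompt")
fake_model = Step(lambda prompt: prompt.upper(), name="model")  # placeholder, no LLM call
parse_output = Step(lambda text: text.removeprefix("QUESTION: "), name="parser")

chain = build_prompt | fake_model | parse_output
print(chain.name)
print(chain.invoke("  what is rag? "))
```

Because each step depends only on the value passed into it, a step can be swapped (a different model, a different parser) without touching the rest of the pipeline, which is the practical payoff of composition over inheritance.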

AutoGen: The Multi-Agent Conversation Engine

AutoGen’s architecture revolves around conversational agents that collaborate through structured dialogues. The framework emphasizes agent-to-agent communication patterns and role specialization.

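```python
import os

from autogen import AssistantAgent, GroupChat, GroupChatManager, UserProxyAgent

# AutoGen's conversational approach (AutoGen 0.2-style API; AutoGen 0.4+
# moved to a different package layout, autogen_agentchat)
config_list = [{"model": "gpt-4o", "api_key": os.environ["OPENAI_API_KEY"]}]  # model name is an example

user_proxy = UserProxyAgent(
    name="UserProxy",
    human_input_mode="NEVER",
    max_consecutive_auto_reply=10,
    # Executes generated code locally; prefer use_docker=True in production
    code_execution_config={"work_dir": "coding", "use_docker": False},
)

engineer = AssistantAgent(
    name="Engineer",
    system_message="You are a software engineer. Write and test code.",
    llm_config={"config_list": config_list},
)

scientist = AssistantAgent(
    name="Scientist",
    system_message="You are a scientist. Analyze data and provide insights.",
    llm_config={"config_list": config_list},
)

groupchat = GroupChat(
    agents=[user_proxy, engineer, scientist],
    messages=[],
    max_round=12,
)

manager = GroupChatManager(groupchat=groupchat, llm_config={"config_list": config_list})

# Start the conversation (example task); the manager picks the next speaker each round
user_proxy.initiate_chat(manager, message="Analyze and plot monthly sales trends.")
```

Key Architectural Characteristics: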

- Conversation-First: Agents communicate through structured message passing

- Role Specialization: Each agent has defined capabilities and responsibilities

- Group Dynamics: Built-in support for multi-agent collaboration

- Human-in-the-Loop: The human_input_mode setting (ALWAYS, TERMINATE, NEVER) controls when a human is asked to respond
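The conversation-first model can be illustrated with a framework-free sketch: agents share one message history, a selection rule picks who speaks next, and the loop stops at max_round or when a reply contains a termination marker. Round-robin selection and the TERMINATE marker are assumptions chosen for simplicity (AutoGen's GroupChat also offers other speaker-selection methods, such as "auto", where an LLM chooses), and ToyAgent replies come from plain functions rather than models.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Message:
    sender: str
    content: str


@dataclass
class ToyAgent:
    """A name plus a function from the shared history to a reply (no LLM)."""
    name: str
    reply: Callable[[List[Message]], str]


def run_group_chat(agents: List[ToyAgent], opening: str, max_round: int = 12) -> List[Message]:
    """Round-robin group chat over a single shared message history."""
    history = [Message("user", opening)]
    for round_index in range(max_round):
        speaker = agents[round_index % len(agents)]  # round-robin speaker selection
        content = speaker.reply(history)
        history.append(Message(speaker.name, content))
        if "TERMINATE" in content:  # termination marker ends the conversation early
            break
    return history


toy_engineer = ToyAgent("Engineer", lambda h: f"Draft code for: {h[0].content}")
toy_scientist = ToyAgent(
    "Scientist",
    lambda h: "Results look valid. TERMINATE" if len(h) >= 3 else "Need more data.",
)

for msg in run_group_chat([toy_engineer, toy_scientist], "plot sales by month"):
    print(f"{msg.sender}: {msg.content}")
```

Every agent sees the full shared history, which is what makes role specialization work, and also why per-conversation memory grows with the number of rounds.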

CrewAI: The Task-Oriented Workflow Engine

CrewAI adopts a task-oriented architecture where agents are organized into crews with specific goals and workflows. The framework emphasizes structured task execution and role-based collaboration.

```python
from crewai import Agent, Crew, Process, Task
from crewai_tools import SerperDevTool  # web search tool; needs SERPER_API_KEY set

# CrewAI's task-oriented approach
researcher = Agent(
    role='Market Research Analyst',
    goal='Find and analyze the latest market trends',
    backstory='Expert in market analysis and trend identification',
    tools=[SerperDevTool()],
    verbose=True
)

writer = Agent(
    role='Technical Writer',
    goal='Write compelling technical content',
    backstory='Experienced technical writer with deep industry knowledge',
    verbose=True
)

research_task = Task(
    description='Research the latest AI agent framework trends for 2025',
    agent=researcher,
    expected_output='Comprehensive market analysis report'
)

write_task = Task(
    description='Write a technical blog post based on the research findings',
    agent=writer,
    expected_output='1500-word technical blog post',
    context=[research_task]  # feed the research output into this task
)

crew = Crew(
    agents=[researcher, writer],
    tasks=[research_task, write_task],
    process=Process.sequential
)

result = crew.kickoff()  # runs the tasks in order and returns the final output
print(result)
```

Key Architectural Characteristics:

- Task-Driven: Workflows organized around concrete tasks with clear outputs

- Role Definition: Explicit agent roles with goals and backstories

- Process Management: Built-in workflow patterns (sequential, hierarchical)

- Context Passing: Outputs of earlier tasks flow into dependent tasks (explicitly via the context parameter)
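A minimal sketch of sequential execution with context passing: tasks run in list order, and each task's function receives the outputs of the tasks named in its context. ToyTask and run_sequential are hypothetical plain-Python stand-ins; in CrewAI the work would be done by an LLM-backed Agent and Crew.kickoff() drives execution.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class ToyTask:
    name: str
    run: Callable[[List[str]], str]  # receives the outputs of its context tasks
    context: List["ToyTask"] = field(default_factory=list)


def run_sequential(tasks: List[ToyTask]) -> Dict[str, str]:
    """Execute tasks in order, passing each one the outputs of its context tasks."""
    outputs: Dict[str, str] = {}
    for task in tasks:
        missing = [dep.name for dep in task.context if dep.name not in outputs]
        if missing:
            raise ValueError(f"{task.name} depends on tasks not yet run: {missing}")
        task_context = [outputs[dep.name] for dep in task.context]
        outputs[task.name] = task.run(task_context)
    return outputs


research = ToyTask("research", lambda ctx: "Trend: multi-agent orchestration is growing")
write = ToyTask("write", lambda ctx: f"Blog post based on -> {ctx[0]}", context=[research])

results = run_sequential([research, write])
print(results["write"])
```

Failing fast on an unmet dependency, rather than running a task with empty context, keeps ordering mistakes visible in a sequential workflow.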

Performance Analysis and Benchmarks

Throughput and Latency

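In production deployments, framework performance varies significantly based on architectural choices. Because each agent step waits on an LLM call, throughput is usually bounded by model latency and provider rate limits rather than by framework overhead, so treat the following ranges as indicative and benchmark them in your own environment.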

LangChain Performance Profile:

- Single-Agent Throughput: 50-100 requests/minute (depending on complexity)

- Memory Overhead: Moderate (15-25MB per agent instance)

- Tool Execution: Optimized for sequential tool usage

- Best For: High-throughput single-agent scenarios

AutoGen Performance Profile:

- Multi-Agent Throughput: 20-40 conversations/minute (varies with agent count and number of rounds)

- Memory Overhead: High (40-60MB per conversation group)

- Message Passing: Optimized for agent-to-agent communication

- Best For: Complex multi-agent collaboration scenarios

CrewAI Performance Profile:

- Workflow Throughput: 30-60 tasks/minute (depends on task complexity)

- Memory Overhead: Low to moderate (20-35MB per crew)

- Task Execution: Optimized for structured workflows

- Best For: Production task orchestration systems
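Because every agent step waits on a model call, a back-of-the-envelope estimate often explains throughput better than framework choice. By Little's law, sustained throughput is roughly concurrency divided by time per request. The sketch below applies it under simplifying assumptions: LLM calls within a request run one after another, and rate limits and queuing are ignored. The call counts and latencies in the usage lines are assumed values, not measurements of any framework.

```python
import math


def requests_per_minute(llm_calls_per_request: int, seconds_per_llm_call: float,
                        concurrent_workers: int = 1) -> float:
    """Little's law: throughput = concurrency / time spent per request."""
    seconds_per_request = llm_calls_per_request * seconds_per_llm_call
    return concurrent_workers * 60.0 / seconds_per_request


def workers_needed(target_rpm: float, llm_calls_per_request: int,
                   seconds_per_llm_call: float) -> int:
    """Smallest number of concurrent workers that reaches target_rpm."""
    per_worker = requests_per_minute(llm_calls_per_request, seconds_per_llm_call)
    return math.ceil(target_rpm / per_worker)


# Assumed: a single agent making 3 sequential LLM calls of about 2 s each.
print(requests_per_minute(3, 2.0))   # 10.0 requests/minute per worker
print(workers_needed(60, 3, 2.0))    # 6 workers for 60 requests/minute

# Assumed: a 12-round group chat with one LLM call of about 2 s per round.
print(requests_per_minute(12, 2.0))  # 2.5 conversations/minute per worker
```

Under these assumptions, workloads that chain many model calls per unit of work (such as multi-round conversations) tend to show lower per-worker throughput than single-agent requests, independent of the framework.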

Resource Utilization Patterns

```python
# Resource monitoring sketch for comparing framework workloads
import time
from typing import Callable, Dict

import psutil


def measure_run(run: Callable[[], object]) -> Dict[str, float]:
    """Measure wall-clock time and the change in this process's resident memory."""
    process = psutil.Process()
    rss_before = process.memory_info().rss
    start_time = time.perf_counter()
    run()
    elapsed = time.perf_counter() - start_time
    rss_delta = process.memory_info().rss - rss_before  # approximate; GC can shrink it
    return {"memory_mb": rss_delta / 1024 / 1024, "time_sec": elapsed}


def monitor_framework_performance(runs: Dict[str, Callable[[], object]]) -> Dict[str, Dict[str, float]]:
    """Monitor memory and wall-clock time for each framework's workload.

    runs maps a framework name to a zero-argument callable, for example
    {"langchain": lambda: agent.invoke({"input": "What is 12 * 7?"}),
     "autogen": lambda: user_proxy.initiate_chat(manager, message="..."),
     "crewai": lambda: crew.kickoff()}.
    Run each workload in a fresh process for cleaner memory numbers.
    """
    return {name: measure_run(run) for name, run in runs.items()}
```

Real-World Implementation Patterns

Enterprise Integration Scenarios

Customer Service Automation (LangChain)

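```python
from langchain.agents import AgentType, initialize_agent
from langchain.memory import ConversationBufferWindowMemory

# Multi-tool customer service agent. knowledge_base_tool, order_lookup_tool,
# sentiment_analyzer_tool, escalation_tool, and enterprise_llm are placeholders
# for your own Tool objects and LLM client.
customer_agent = initialize_agent(
    tools=[
        knowledge_base_tool,
        order_lookup_tool,
        sentiment_analyzer_tool,
        escalation_tool,
    ],
    llm=enterprise_llm,
    memory=ConversationBufferWindowMemory(k=10),  # keep the last 10 exchanges
    agent=AgentType.STRUCTURED_CHAT_ZERO_SHOT_REACT_DESCRIPTION,
)
```

Research Team Simulation (AutoGen)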

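```python
from autogen import GroupChat, GroupChatManager

# Multi-disciplinary research team. The four agents and config_list are
# placeholders, defined as in the AutoGen example above.
research_team = GroupChat(
    agents=[
        data_scientist_agent,
        domain_expert_agent,
        statistician_agent,
        research_writer_agent,
    ],
    messages=[],
    max_round=20,
    speaker_selection_method="auto",  # the manager's LLM picks the next speaker
)

research_manager = GroupChatManager(groupchat=research_team, llm_config={"config_list": config_list})
```

Content Production Pipeline (CrewAI)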

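```python
from crewai import Crew, Process

# Content creation workflow. The agents, tasks, and content_manager are
# placeholders built as in the CrewAI example above. With a hierarchical
# process, the manager agent is passed separately rather than in agents.
content_crew = Crew(
    agents=[researcher, strategist, writer, editor],
    tasks=[research_task, strategy_task, writing_task, editing_task],
    process=Process.hierarchical,
    manager_agent=content_manager,  # alternatively, pass manager_llm
)
```

Scalability and Deployment Considerations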

LangChain Scaling Patterns:

- Horizontal Scaling: Stateless agents can be load-balanced

- Vector Store Integration: Optimized for RAG architectures

- Streaming Support: Real-time response streaming for user interfaces

- Production Ready: Extensive monitoring and observability tools

AutoGen Scaling Patterns:

- Conversation Isolation: Each conversation group runs independently

- Agent Pooling: Reusable agent instances across conversations

- Memory Management: Configurable conversation history retention

- Human Oversight: Built-in mechanisms for human intervention
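Configurable history retention usually means bounding how many past messages are replayed to the model on each turn. A minimal sketch using collections.deque(maxlen=...): the oldest messages drop off automatically, while a pinned system message is always kept. WindowedHistory is a hypothetical illustration, similar in spirit to LangChain's ConversationBufferWindowMemory (whose k counts exchanges rather than individual messages); it is not how AutoGen stores history internally.

```python
from collections import deque
from typing import Deque, Dict, List


class WindowedHistory:
    """Keep a pinned system message plus only the last max_messages messages."""

    def __init__(self, system_message: str, max_messages: int = 10) -> None:
        self.system = {"role": "system", "content": system_message}
        self.recent: Deque[Dict[str, str]] = deque(maxlen=max_messages)

    def add(self, role: str, content: str) -> None:
        self.recent.append({"role": role, "content": content})  # evicts oldest when full

    def for_model(self) -> List[Dict[str, str]]:
        """Messages to send on the next LLM call."""
        return [self.system, *self.recent]


history = WindowedHistory("You are a data analyst.", max_messages=4)
for i in range(6):
    history.add("user" if i % 2 == 0 else "assistant", f"message {i}")

print([m["content"] for m in history.for_model()])
```

Bounding the window caps both prompt tokens and per-conversation memory, at the cost of forgetting early turns; summarizing evicted messages is a common refinement.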

CrewAI Scaling Patterns:

- Workflow Parallelization: Independent tasks can run concurrently (async_execution=True on a Task)

- Resource Allocation: Per-agent limits such as max_iter and max_rpm

- Task Queuing: Built-in support for task prioritization

- Progress Tracking: Comprehensive workflow monitoring
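Running independent work concurrently (separate conversations, or independent tasks within a workflow) mostly comes down to bounding concurrency so you stay under provider rate limits. A minimal, framework-neutral asyncio sketch, assuming each unit of work is an async function; fake_llm_task and ConcurrencyTracker are hypothetical placeholders that sleep and count instead of calling a model.

```python
import asyncio
from typing import Awaitable, Callable, List


async def run_bounded(jobs: List[Callable[[], Awaitable[str]]], max_concurrency: int) -> List[str]:
    """Run independent jobs concurrently, at most max_concurrency at a time.

    Results come back in the same order as jobs.
    """
    semaphore = asyncio.Semaphore(max_concurrency)

    async def guarded(job: Callable[[], Awaitable[str]]) -> str:
        async with semaphore:  # waits here while max_concurrency jobs are running
            return await job()

    return list(await asyncio.gather(*(guarded(job) for job in jobs)))


class ConcurrencyTracker:
    """Records how many placeholder jobs are running at the same time."""

    def __init__(self) -> None:
        self.active = 0
        self.peak = 0


def make_job(name: str, tracker: ConcurrencyTracker) -> Callable[[], Awaitable[str]]:
    async def fake_llm_task() -> str:
        tracker.active += 1
        tracker.peak = max(tracker.peak, tracker.active)
        await asyncio.sleep(0.01)  # stands in for a model call's network latency
        tracker.active -= 1
        return f"{name}: done"
    return fake_llm_task


tracker = ConcurrencyTracker()
jobs = [make_job(f"task-{i}", tracker) for i in range(6)]
results = asyncio.run(run_bounded(jobs, max_concurrency=2))
print(results)
print("peak concurrency:", tracker.peak)
```

A semaphore keeps the concurrency limit in one place; the same pattern applies whether each job is a LangChain agent call, an AutoGen conversation, or a CrewAI crew run.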

Production Readiness and Enterprise Features

Monitoring and Observability

All three frameworks provide varying levels of production monitoring capabilities:

LangChain:

- LangSmith integration for detailed tracing

- Custom callback handlers for monitoring

- Performance metrics collection

- Error tracking and debugging tools

AutoGen:

- Conversation logging and replay

- Agent performance metrics

- Message flow visualization

- Error handling and recovery

CrewAI:

- Task execution tracking

- Workflow progress monitoring

- Agent performance analytics

- Integration with APM tools
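The monitoring features listed above share a common core: record each step's name, duration, and outcome. As a framework-neutral sketch (not a LangSmith, AutoGen, or CrewAI API), a decorator can wrap any tool or agent step and emit one structured record per call; in LangChain the equivalent hook would be a custom callback handler. TRACE_LOG and order_lookup are hypothetical stand-ins for your logging/APM sink and a real tool.

```python
import functools
import time
from typing import Any, Callable, Dict, List

TRACE_LOG: List[Dict[str, Any]] = []  # hypothetical sink; swap for logging or APM export


def traced(step_name: str) -> Callable:
    """Decorator that records duration and success/failure of each call."""
    def decorator(fn: Callable) -> Callable:
        @functools.wraps(fn)
        def wrapper(*args: Any, **kwargs: Any) -> Any:
            start = time.perf_counter()
            status = "ok"
            try:
                return fn(*args, **kwargs)
            except Exception as exc:
                status = f"error: {type(exc).__name__}"
                raise  # record the failure but let the caller handle it
            finally:
                TRACE_LOG.append({
                    "step": step_name,
                    "status": status,
                    "duration_ms": (time.perf_counter() - start) * 1000,
                })
        return wrapper
    return decorator


@traced("order_lookup")
def order_lookup(order_id: str) -> str:
    if not order_id.isdigit():
        raise ValueError("order id must be numeric")
    return f"Order {order_id}: shipped"


order_lookup("1042")
try:
    order_lookup("abc")
except ValueError:
    pass

for record in TRACE_LOG:
    print(record["step"], record["status"], f'{record["duration_ms"]:.3f} ms')
```

Re-raising after recording keeps error handling where it belongs while still producing a trace record for every failed step.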

Security and Compliance

Enterprise deployments require robust security measures:

```python
# Security configuration example (illustrative policy structure; none of the
# three frameworks reads this dictionary directly, so application code must
# enforce it)
security_config = {
    "input_validation": {
        "max_input_length": 10000,
        "sanitization_rules": ["no_sql_injection", "no_xss"],
    },
    "output_filtering": {
        "content_moderation": True,
        "pii_detection": True,
    },
    "access_control": {
        "role_based_access": True,
        "tool_permissions": {
            "database_tools": ["admin", "data_scientist"],
            "api_tools": ["developer", "integration_specialist"],
        },
    },
}
```

Framework Selection Guidelines

When to Choose LangChain

Optimal Use Cases:

- Complex single-agent applications

- RAG (Retrieval-Augmented Generation) systems

- Applications requiring extensive tool integration

- Scenarios needing fine-grained control over agent behavior

- Projects with existing LangChain ecosystem investments

Technical Requirements:

- Modular, composable architecture

- Extensive third-party integrations

- Sophisticated memory management

- Real-time streaming capabilities

When to Choose AutoGen

Optimal Use Cases:

- Multi-agent collaboration scenarios

- Complex problem-solving requiring diverse expertise

- Human-in-the-loop workflows

- Research and analysis teams

- Applications benefiting from conversational dynamics

Technical Requirements:

- Structured agent communication

- Role specialization and collaboration

- Conversation management

- Human oversight capabilities

When to Choose CrewAI

Optimal Use Cases:

- Task-oriented workflows

- Content production pipelines

- Business process automation

- Structured team collaboration

- Production task orchestration

Technical Requirements:

- Clear task definitions and outputs

- Role-based agent organization

- Workflow management

- Progress tracking and monitoring

Future Evolution and Industry Trends

Emerging Architectural Patterns

The agent framework landscape continues to evolve with several emerging patterns:

- Hybrid Architectures: Combining elements from multiple frameworks

- Federated Learning: Distributed agent training and knowledge sharing

- Specialized Agents: Domain-specific optimizations

- Edge Deployment: Lightweight agents for resource-constrained environments

Performance Optimization Roadmap

```python
# Future optimization techniques
optimization_roadmap = {
    "model_compression": {
        "quantization": "8-bit and 4-bit precision",
        "pruning": "Remove redundant parameters",
        "knowledge_distillation": "Train smaller, faster models to mimic larger ones",
    },
    "inference_optimization": {
        "caching": "Reuse responses or KV caches for repeated prompts",
        "batching": "Serve multiple requests per forward pass",
        "speculative_decoding": "A draft model proposes tokens; the target model verifies them",
    },
    "system_optimization": {
        "hardware_acceleration": "GPU/TPU optimization",
        "memory_management": "Efficient resource usage",
        "network_optimization": "Reduced latency",
    },
}
```

Conclusion: Strategic Framework Selection

Choosing between LangChain, AutoGen, and CrewAI requires careful consideration of your specific use case, technical requirements, and organizational constraints. Each framework excels in different scenarios:

- LangChain provides the most flexibility and ecosystem maturity for complex single-agent applications

- AutoGen offers superior multi-agent collaboration capabilities for team-based problem solving

- CrewAI delivers structured workflow management for production task orchestration

The optimal choice depends on your architectural priorities: composition and flexibility (LangChain), collaboration and conversation (AutoGen), or structure and task management (CrewAI). As the ecosystem matures, we expect increased interoperability and hybrid approaches that leverage the strengths of multiple frameworks.

For production deployments, consider starting with pilot projects using each framework to evaluate performance, developer experience, and operational overhead in your specific environment. The rapidly evolving nature of agent frameworks means that today’s limitations may be tomorrow’s features, making flexibility and adaptability key considerations in your architectural decisions.